Groundhog Day Security

Do you remember “Groundhog Day”? It is a timeless movie about a cynical weatherman who finds himself trapped in a time loop, reliving the same day over and over again until he learns the value of kindness and selflessness. Well, it turns out that implementing a solid security strategy is also about iteration and improvement over time.

So far, in the first and second parts of this cybersecurity practices series, we have discussed the importance of training and improving team skills, the human factor, and how different teams interact with each other. However, none of these elements exists in isolation.

Nowadays, we consider security a never-ending loop that is integrated into traditional development processes from beginning to end. It combines continuous integration and continuous delivery with code reviews, vulnerability scanning, and risk assessments, efforts traditionally overlooked and left until the late stages of a project.

With frameworks such as the OWASP Application Security Verification Standard (ASVS), and standard awareness lists such as the ever-popular OWASP Top 10 Web Application Security Risks or the CWE Top 25 Most Dangerous Software Weaknesses, we now have a knowledge base of vulnerabilities that should be the baseline in any cybersecurity strategy. Undoubtedly, we have the knowledge, but how do we put it to good use and embed it into our unique lifecycle?

Sun Tzu said, “Know the enemy and know yourself; in a hundred battles you will never be in peril.” However, is knowledge about raw tactics, techniques, and procedures enough? Can we know about the most commonly exploited vulnerabilities and still be prey to an attacker? Are we just striving for compliance with regulations, or do we seek a unique strategy tailored to the organization’s security needs?

Shifting Security Left

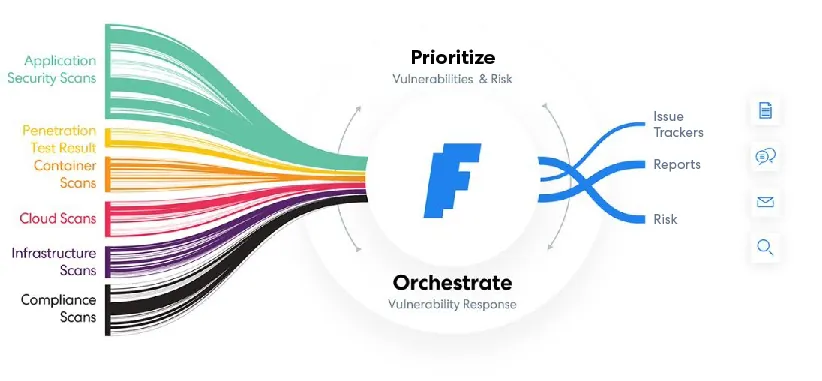

Enabling security as part of the development process means breaking down intricate pipelines into manageable cycles, allowing Dev, Sec, and Ops to cooperate by leveraging the power of automation and continuous integration. A vulnerability management platform working in tandem with security analysis tools lets us normalize, track, and identify potential issues before they become increasingly expensive down the line.

Security is sometimes mistakenly viewed as obstructing a development team’s performance, adding overhead to a complicated, fast-paced discipline. Even with the arrival of agile security methodologies, embedding security still requires flexibility and adaptability from all parties involved to create a culture that fits the business needs while reserving time and budget for triaging and fixing vulnerabilities.

Identifying and mitigating vulnerabilities at the source offers a tactical advantage in today’s threat landscape. By collecting metrics and logs, we can build comprehensive dashboards that decision-makers can understand and use at a glance, providing a straightforward way of defining evolving business needs without sacrificing security. In addition, we can define a baseline of “normal” behavior for our applications, a holy grail that detection engineers can quickly embrace by defining alert thresholds, prioritizing logging events and sources, and escalating newly found issues that would otherwise get lost in everyday noise.
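As a rough illustration of the baseline idea, the sketch below derives a “normal” range from historical metric values and flags anything far above it. The metric (failed logins per hour), the sample data, and the three-standard-deviations rule are all assumptions chosen for the example, not a recommended detection rule.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Return (mean, sample standard deviation) of historical metric values."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, k=3.0):
    """Flag a value more than k standard deviations above the baseline mean."""
    mu, sigma = baseline
    return value > mu + k * sigma


# Hypothetical history: failed logins per hour during a quiet week.
history = [4, 6, 5, 7, 5, 6, 4, 5]
baseline = build_baseline(history)

# A value close to the usual range stays quiet; a sudden spike is escalated.
quiet_hour = is_anomalous(6, baseline)
spike_hour = is_anomalous(40, baseline)
```

In practice the threshold `k` would be tuned per metric so that alerts stay rare enough to be acted upon.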

Integrating security as a cornerstone of our operations’ lifecycle is only possible with a transversal mindset of collaboration and integration. All teams and members are responsible for providing a rapid and measured response to issues that arise during continuous and automated testing. However, how do we manage our findings and avoid the ever-dreaded alert fatigue as we gather more information?

Shifting Security Right

Shifting security to the earliest stages of development aims to detect vulnerabilities right from the start. This is an excellent idea in theory but hardly achievable without flaws in the real world. That’s why we often consider runtime and production environment analysis equally important.

When we shift security to the end of the development lifecycle, we gather information from actual usage, get logs from deployment mishaps and misconfigurations, and can detect other black-swan events that can only be replicated when complex application architectures begin to function as a single entity. With the arrival of cloud-native distributed applications, we need observability and monitoring when dealing with containers, microservices, and a whole myriad of building blocks that auto-scale and are dynamic by nature.

Threat actors are constantly looking for novel ways to exploit vulnerable systems. Treating security as a static methodology, or as something that can be done “just once”, will not withstand the sophistication of the persistent adversaries that are part of this changing security landscape. It’s not unusual for Advanced Persistent Threat (APT) actors to spend weeks or even months in stages of Discovery, Lateral Movement, Collection, Command and Control, and Exfiltration. Without focusing on real scenarios and risks, we might have a false sense of security by only testing our applications and infrastructure in a controlled environment.

Building a cybersecurity strategy

This three-part series on good cybersecurity practices covers some fundamental items for developing a robust security strategy and architecture. We can highlight automated scanning, automated testing (static and dynamic), hardening, secrets management, Security as Code, and Threat Intelligence. Let’s dive deep into each one, shall we?

– Automated Vulnerability Scanning and Continuous Testing

In the past, countless organizations have naively approached vulnerability management as a one-time activity. In contrast, we now widely accept that repeatability, reusability, and consistency are three key elements of a healthy DevSecOps approach. An ongoing process of detecting and validating vulnerabilities, then prioritizing and addressing them according to their defined severity, is not only recommended but necessary.
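The prioritization step can be sketched in a few lines. The example below deduplicates findings reported by repeated scans and orders them by severity; the `Finding` fields, the severity labels, and the sample identifiers are hypothetical and do not correspond to any particular scanner’s output.

```python
from dataclasses import dataclass

# Assumed severity scale; lower number means "address first".
SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass(frozen=True)
class Finding:
    identifier: str  # hypothetical finding ID
    component: str
    severity: str


def triage(findings):
    """Drop exact duplicates and sort by severity, then by identifier."""
    unique = set(findings)
    return sorted(unique, key=lambda f: (SEVERITY_ORDER[f.severity], f.identifier))


# The same issue reported by two consecutive scans appears only once.
scan_results = [
    Finding("VULN-003", "web-frontend", "low"),
    Finding("VULN-001", "auth-service", "critical"),
    Finding("VULN-002", "billing-api", "high"),
    Finding("VULN-001", "auth-service", "critical"),
]
queue = triage(scan_results)
```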

– Secure Coding – SAST, DAST, and beyond

As the development process evolves and different components in our pipeline come together as a single build, we rely on Static Application Security Testing (SAST) to catch trivial (but dangerous) software weaknesses. This white-box testing methodology helps us analyze and identify potential vulnerabilities in our source code as early as possible. Even when developers are properly trained and aware of the most critical security risks, as the code base grows bigger, it becomes increasingly difficult to maintain a constrained attack surface without relying on automated testing and scanning processes.

As important as SAST, we have Dynamic Application Security Testing (DAST), a black-box methodology that aims to mimic the behavior of a malicious user. We observe how our application behaves when encountering unexpected input at runtime by simulating commonly known attacks and using sophisticated techniques such as fuzzing.
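To give a feel for what a static check does, here is a deliberately tiny sketch that parses Python source without running it and reports direct calls to `eval` or `exec`. It is a toy, not a substitute for a real SAST tool; the `RISKY_CALLS` set and the sample snippet are assumptions made for the example.

```python
import ast

# Assumed list of call names we want to flag.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source):
    """Return sorted (line number, function name) pairs for risky direct calls."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            hits.append((node.lineno, node.func.id))
    return sorted(hits)


# Hypothetical snippet under review; it is only parsed, never executed.
sample = "x = 1\ny = eval(input())\n"
report = find_risky_calls(sample)
```

Because the source is only parsed, this kind of check can run on every commit, long before the code is deployed.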

– Application and Host Hardening

By reducing the attack surface, we limit the attacker’s possibilities and force them to attempt more elaborate and noisier techniques. Approaching hardening as a multi-layered, defense-in-depth matter can give us an early warning and enough time to contain and mitigate a potential compromise before it escalates. Any feature in an application that is not needed or not production-ready should be removed, along with unnecessarily detailed debugging messages and development accounts that had access during initial design and coding.

The same logic applies to host and server hardening: apply the principle of least privilege to all accounts, implement a clear separation of roles, remove unused services and software, and properly patch the software stack before going live.

– Secrets Management

Secrets must be accessed or generated on demand, and the authentication methods used to reach them must be audited beforehand. Whether it’s an API key, access token, or login information, separating our code logic from confidential information can contain a potential data leak. Long gone are the days when an in-house secrets management solution was needed, with all major cloud providers offering synchronized and encrypted secrets management.
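A minimal sketch of keeping secrets out of the code base, assuming the deployment platform or a secrets manager injects them as environment variables at runtime. The helper name `get_secret`, the exception class, and the variable name `PAYMENTS_API_KEY` are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been provided at runtime."""


def get_secret(name, environ=None):
    """Fetch a secret on demand instead of hardcoding it in source control."""
    environ = os.environ if environ is None else environ
    value = environ.get(name)
    if not value:
        # Fail loudly, and never include the secret value in the message.
        raise MissingSecretError(f"secret {name!r} is not set")
    return value


# Usage with an explicit mapping standing in for the real environment.
fake_environment = {"PAYMENTS_API_KEY": "example-value"}
api_key = get_secret("PAYMENTS_API_KEY", fake_environment)
```

Passing the mapping explicitly keeps the helper easy to test; in production code the default of `os.environ` would typically be used.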

– Security as Code

In Security as Code (SaC), we define repeatable tests, configurations, policies, and scans that allow continuous monitoring, knowledge sharing, and distributed responsibility. We often define our Infrastructure as Code (IaC) to support SaC in deploying environments faster and more consistently. We make sure all best practices are applied to our environments right from the creation. As pipelines and workflows become increasingly complex, it’s essential to automate the management and provisioning of IT resources.
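One way to picture Security as Code is a set of policies written as plain, repeatable checks that run against every environment definition. The sketch below is an assumption-laden example: the resource is a simple dictionary, and the `encrypted` and `public_read` fields are hypothetical names, not the schema of any real IaC tool.

```python
# Each policy pairs a human-readable rule with a check on a resource definition.
POLICIES = [
    ("storage must be encrypted at rest", lambda r: r.get("encrypted") is True),
    ("storage must not be publicly readable", lambda r: r.get("public_read") is not True),
]


def evaluate(resource):
    """Return the descriptions of every policy the resource violates."""
    return [description for description, check in POLICIES if not check(resource)]


# Hypothetical resource definitions, as they might appear after parsing IaC files.
compliant_bucket = {"name": "audit-logs", "encrypted": True, "public_read": False}
risky_bucket = {"name": "scratch", "encrypted": False, "public_read": True}

compliant_violations = evaluate(compliant_bucket)
risky_violations = evaluate(risky_bucket)
```

Running such checks in the pipeline means a non-compliant environment can be rejected before it is ever created.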

– Threat Intelligence

Vulnerabilities can remain dormant and undetected for long periods of time. Sometimes it’s not until a threat actor or skilled attacker exploits them that we even consider them a risk. We need to understand the current threat landscape, industry trends, and vulnerabilities exploited in the wild by different groups. Not every threat model is created equal, and we need to learn in detail about the threat actors we might face in the near future. We have industry-accepted frameworks, such as MITRE ATT&CK and MITRE D3FEND, that help us emulate specific adversary tactics and techniques, testing our detection capabilities in controlled scenarios.

Good security practices go hand in hand with automation, integration, and collaboration. As dynamic as the threat landscape is, so must our strategy be. With over 26 thousand vulnerabilities reported last year, it’s now more important than ever to shift security from left to right, and then everywhere.

Check out Good Practices in Cybersecurity Part 1 & Part 2

Trainings, red teaming services, or continuous scanning? We’ve got you covered. Reach out for more information.